Access Local Docker Containers by Domain Names instead of Ports

Jones-Glotfelty Shipping Container House, Flagstaff AZ by Glamour Schatz is licensed under CC BY

Avoiding Ports when Accessing Local Docker Containers

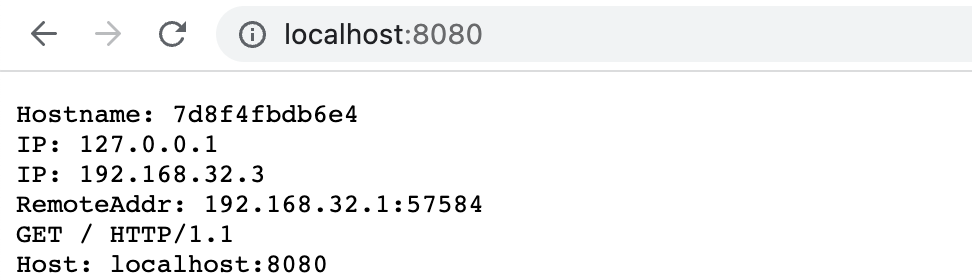

When using Docker during development, you’ll expose your application on localhost. Typically, you simply forward the exposed port from the application to the host machine. This allows you to access it via localhost:8080 (or whichever host port you mapped in the configuration).

Remembering which port corresponds to which application during development is error-prone. I prefer nice vanity URLs for my local applications, like whoami-one.localhost, instead of ports. I’ll demonstrate an easy way to get them using nginxproxy/nginx-proxy.

The Setup

I’m using Docker Compose, but in theory, you can do this with just docker commands as well.

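The core of the solution is nginxproxy/nginx-proxy, which watches for containers starting and stopping, regenerates its nginx reverse proxy configuration accordingly, and reloads nginx. It learns about those events through the Docker socket, which is why the socket is mounted into the container.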

In the following snippet, I’m specifying two services: nginx-proxy and whoami-one. The whoami-one service is just for demonstration purposes; in practice it would be the application you are developing. The VIRTUAL_HOST environment variable specifies the domain name you will use to reach that service.

version: '3'

services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy
    ports:
      - '80:80'
    volumes:
      - /var/run/docker.sock:/tmp/docker.sock:ro

  whoami-one:
    image: containous/whoami
    environment:
      - VIRTUAL_HOST=whoami-one.localhost

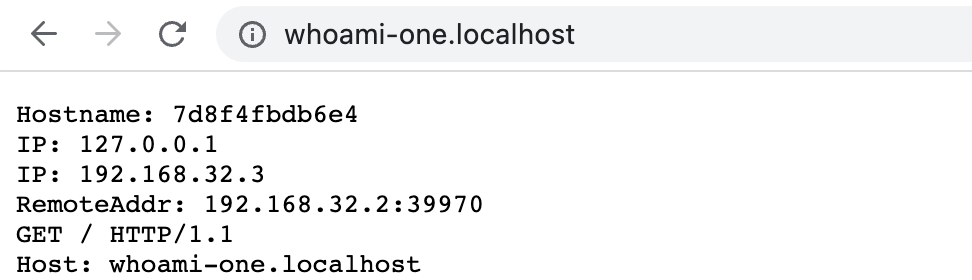

Now run docker compose up, visit whoami-one.localhost, and you should see it all work!

Note: I’ve used localhost as the top-level domain (TLD) because it is reserved and avoids conflicts with real domains. I also use Chrome as my browser, which resolves .localhost names to the loopback address on its own; with other browsers or tools you may need a bit more work (such as hosts-file entries) to get that TLD to work.
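A single Compose file can front several apps the same way. The sketch below shows two nginx-proxy options: a comma-separated VIRTUAL_HOST list, which serves one container under several names, and VIRTUAL_PORT, which points the proxy at a container port other than 80. The `my-app` service, its `my-app:dev` image, and port 3000 are hypothetical placeholders for something you’re building.

```yaml
version: '3'

services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy
    ports:
      - '80:80'
    volumes:
      - /var/run/docker.sock:/tmp/docker.sock:ro

  whoami-one:
    image: containous/whoami
    environment:
      # One container reachable under two hostnames
      - VIRTUAL_HOST=whoami-one.localhost,one.localhost

  my-app:
    # Hypothetical image for an app under development
    image: my-app:dev
    expose:
      - '3000'
    environment:
      - VIRTUAL_HOST=my-app.localhost
      # The (hypothetical) app listens on 3000 rather than 80
      - VIRTUAL_PORT=3000
```

Note that neither app publishes a host port. Only nginx-proxy binds port 80 on the host, which is the point of the whole setup.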

Multiple Services and Projects

One issue with the above solution is that it doesn’t work for services in different Compose projects. Each Compose project sets up its own default network, named after the project (the current directory by default, e.g. myproject_default), and nginx-proxy can only reach containers on a network it shares with them. We can work around this by having every project use one consistently named Docker network.

First, we’ll define the nginx-proxy container on its own, but give the default network an explicit name. Any container that joins this network can then communicate with the proxy.

version: '3'

services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy
    ports:
      - '80:80'
    volumes:
      - /var/run/docker.sock:/tmp/docker.sock:ro

networks:
  default:
    name: local-network

Now we can create a new whoami project (in a different directory) that joins the same network we defined for nginx-proxy. Because the two containers share a network, nginx-proxy can reach the whoami container, and we can visit whoami.localhost with no problems.

version: '3'

services:
  whoami:
    image: containous/whoami
    environment:
      - VIRTUAL_HOST=whoami.localhost

networks:
  default:
    name: local-network
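When both files declare the same network name, each project treats the network as its own, and Compose may warn that a network with that name already exists but was not created by the current project. A variant worth considering is to mark the network as external in the app projects so they only join it and never try to create or remove it. This sketch assumes the nginx-proxy project (or a manual `docker network create local-network`) has already created the network; otherwise `docker compose up` fails because the external network doesn’t exist.

```yaml
version: '3'

services:
  whoami:
    image: containous/whoami
    environment:
      - VIRTUAL_HOST=whoami.localhost

networks:
  default:
    name: local-network
    # Join the existing network owned by the nginx-proxy project
    # instead of having this project manage it too
    external: true
```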

Overall, I enjoy using this setup to tell local Docker containers apart by domain name instead of by port.

Other Resources

- There are plenty of other options that nginx-proxy has, like SSL support.

- This also isn’t a unique solution, as Traefik can also be used in a similar fashion.

- A throwback to an older project I made is Docker Compose DNS Consistency (DCDC), which provides consistent DNS resolution for services (not only for HTTP) internal and external to the Docker network.
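For the SSL support mentioned above, nginx-proxy’s documentation describes loading certificates mounted at /etc/nginx/certs, named after the virtual host (`<VIRTUAL_HOST>.crt` and `<VIRTUAL_HOST>.key`). A minimal sketch, assuming you have already generated a certificate for whoami-one.localhost (for example with mkcert) into a hypothetical ./certs directory next to the Compose file:

```yaml
version: '3'

services:
  nginx-proxy:
    image: nginxproxy/nginx-proxy
    ports:
      - '80:80'
      - '443:443'
    volumes:
      - /var/run/docker.sock:/tmp/docker.sock:ro
      # Hypothetical host directory containing
      # whoami-one.localhost.crt and whoami-one.localhost.key
      - ./certs:/etc/nginx/certs:ro

  whoami-one:
    image: containous/whoami
    environment:
      - VIRTUAL_HOST=whoami-one.localhost
```

Your browser will only trust the certificate if its issuing CA is trusted locally, which is something tools like mkcert set up for you.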